Like the ASYNC_IO_COMPLETION wait type, ASYNC_NETWORK_IO

pertains to throughput; however, it focuses on the throughput of the network

connection between your SQL Server instance and its clients, rather than the

storage subsystem. It's important to note that ASYNC_NETWORK_IO waits are

normal and do not necessarily indicate a network issue, as these waits can

occur even when querying the SQL Server instance directly on the server.

What is the ASYNC_NETWORK_IO Wait Type?

ASYNC_NETWORK_IO waits typically happen when client applications are unable to process query results quickly enough or when there are network performance issues. Most often, the former is the case, as many applications handle SQL Server results row-by-row or struggle with large data volumes, causing SQL Server to wait to send query results over the network. This waiting period is logged as the ASYNC_NETWORK_IO wait type. Additionally, ASYNC_NETWORK_IO waits can occur when querying remote databases via a linked server.

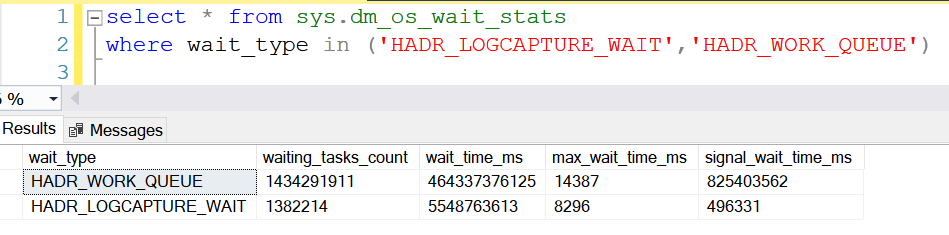

Sys.dm_os_wait_stats

You can check the ASYNC_NETWORK_IO wait type using the

sys.dm_os_wait_stats DMV. We might not require a complicated environment to

test the ASYNC_NETWORK_IO wait type.

Before running the DMV, lets clear the information from the DMV

with a simple DBCC command.

use master

go

DBCC SQLPERF('sys.dm_os_wait_stats', CLEAR);

Once the information is cleared from the DMV, lets run a simple

SELECT statement from the SQL Server instance using SSMS.

select * from DBA_Async_Test

After querying

from the above table, we can see a spike in the ASYNC_NETWORK_IO wait in

the sys.dm_os_wait_stats.

select * from sys.dm_os_wait_stats where wait_type='Async_network_io'

We saw a spike in the wait type because the SQL Server

Instance was not able to get all the result in the SSMS as fast as the instance

itself. When the instance is not able to get the results fast on to the SSMS,

we start to see more of this wait type.

How To Lower ASYNC_NETWORK_IO Waits?

The answer is pretty simple. To lower ASYNC_NETWORK_IO Wait,

we just must modify the query by limiting the result set as much as possible. Let’s

look at the simple example as to how we can lower the wait type.

We will be clearing the wait stat information from

sys.dm_os_wait_stats again to record the reading one more time.

use master

go

DBCC SQLPERF('sys.dm_os_wait_stats', CLEAR);

This time, we will be limiting the records from to take to just TOP 100.

select TOP 100 * from DBA_Async_Test

If you

notice that the ASYNC_NETWORK_IO wait has been minimized. The SSMS was

able to get the results fast enough and had not to wait for the completion of

the query for longer duration. We can also reduce the ASYNC_NETWORK_IO wait by

adding WHERE conditions to our query.

Note: If you still think that the above test case failed in your

scenario, then please check the network configuration. At times, there are

possibilities that the network configuration as well can slow down fetching the

result sets.

We can check the network configuration by opening the task manager and navigating on to the “Ethernet” option.

If you observe high network utilization along with increased ASYNC_NETWORK_IO wait times, the network might be the bottleneck. In such instances, consulting your network administrator could be beneficial. Network configurations are complex, involving various components such as switches, routers, firewalls, network cables, drivers, firmware, and possibly virtualized operating systems. Each of these elements can impact network throughput and contribute to ASYNC_NETWORK_IO waits.

Bottomline

ASYNC_NETWORK_IO

waits happen when an application requests query results from a SQL Server

instance over the network and is unable to process the returned results quickly

enough. While these waits are generally normal, unusually high wait times might

indicate changes in query results or network issues. To reduce application

related ASYNC_NETWORK_IO wait times, consider decreasing the number of rows

and/or columns returned to the application.

You may also refer :